Student Questionnaire: This questionnaire collects information about learners’ attitudes towards maths and science, as well as their school experiences and home environments.

Teacher Questionnaire: The mathematics and science teachers for each of the sampled classes are required to answer questions regarding their experience, views and attitudes towards the teaching and learning of their respective subjects within the classroom environment, as well as the topics taught in each subject and classroom practices.

School Questionnaire: This questionnaire is administered to the principals of the participating schools. Questions relate to school characteristics, instructional time, resources and technology, parental involvement, school climate for learning, teachers, the role of the principal, and learners’ school readiness.

Home Questionnaire: In 2015, a home questionnaire was introduced for the fourth grade (fifth grade in South Africa) assessment. This questionnaire was administered to the parents or caregivers of each learner and collected information about learners’ home backgrounds and early learning experiences.

Schools and learners are sampled by the IEA using a rigorous sampling methodology, and individual schools therefore cannot sign up to participate.

Direct comparisons between the scores for each grade should not be made. The scaling of TIMSS data is conducted separately for each grade and each content domain. Although each scale was created to have a mean of 500 and a standard deviation of 100, the subject matter and the level of difficulty of the items differ between the assessments for each grade.

The student questionnaires are administered to learners at school after they have completed the mathematics and science assessments.

The teacher and school questionnaires are completed by the mathematics and science teachers, and the school principals during the testing time for the learners. These are then collected by the test administrators before they leave the school.

The home questionnaire, introduced in 2015, was sent home with learners before the day of testing and learners were required to return the completed questionnaires on the day of assessment.

The TIMSS International Databases are available to all individuals interested in the data collected and analysed as part of TIMSS since 1995. The aim is to support and promote the use of these data by researchers, analysts, and others interested in improving education. These databases are available here.

In order to perform statistical analyses using the TIMSS data, you will need to download the IEA IDB Analyzer software. Used in conjunction with SPSS, the IEA IDB Analyzer allows you to merge the various data file types of the TIMSS database. The software generates SPSS syntax that takes into account information from the sample design in the computation of statistics and their standard errors. In addition, the generated SPSS syntax makes use of plausible values for calculating estimates of achievement scores and their standard errors, combining both sampling variance and imputation variance.
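As a rough illustration of how sampling variance and imputation variance are combined, the sketch below applies the standard multiple-imputation combining rule to a set of plausible-value estimates. It is not the IEA IDB Analyzer's code: the function name, the input values and the choice to average the sampling variance across plausible values are assumptions made for illustration only.

```python
import math
from statistics import mean, variance


def combine_plausible_values(pv_estimates, sampling_variances):
    """Combine per-plausible-value results into one estimate and standard error.

    pv_estimates: the statistic (e.g. a mean score) computed once per plausible value.
    sampling_variances: the sampling variance of that statistic, one per plausible value
    (assumed here to have been computed elsewhere, e.g. by a replication method).
    """
    m = len(pv_estimates)
    estimate = mean(pv_estimates)                # final estimate: average over plausible values
    sampling_var = mean(sampling_variances)      # assumption: average the sampling variances
    imputation_var = (1 + 1 / m) * variance(pv_estimates)  # spread between plausible values
    standard_error = math.sqrt(sampling_var + imputation_var)
    return estimate, standard_error


# Hypothetical numbers, not TIMSS results.
est, se = combine_plausible_values([370.0, 372.0, 374.0, 376.0, 378.0], [9.0] * 5)
print(round(est, 1), round(se, 2))
```

The point of the sketch is that the reported standard error is larger than one based on sampling variance alone, because it also reflects the uncertainty in each learner's achievement score.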

TIMSS selects a random sample of learners that represents the full population of learners in each target grade to provide valid estimates of learner achievement and characteristics.

The sample must consist of a minimum of 150 schools per grade, with a minimum of 4,000 learners assessed per grade. The school response rate target is 85 percent for all countries. In order for a country’s data to be included in the international database, a minimum participation rate of 50 percent of schools from the original sample of schools is required. In addition, there is a response rate target for classrooms of 95 percent, and a target learner response rate of 85 percent, from both original and substitute schools.
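The thresholds above can be expressed as a simple checklist. The sketch below is only an illustration: the function and argument names are hypothetical, rates are assumed to be given as proportions between 0 and 1, and the official participation rules contain more detail than is captured here.

```python
def check_participation(n_schools, n_learners, original_school_rate,
                        school_rate, class_rate, learner_rate):
    """Return a list of the thresholds described above that are not met.

    original_school_rate: participation rate among originally sampled schools.
    school_rate: school response rate (assumed to include substitute schools).
    """
    problems = []
    if n_schools < 150:
        problems.append("fewer than 150 schools")
    if n_learners < 4000:
        problems.append("fewer than 4,000 learners")
    if original_school_rate < 0.50:
        problems.append("original-school participation below 50 percent (minimum)")
    if school_rate < 0.85:
        problems.append("school response rate below 85 percent (target)")
    if class_rate < 0.95:
        problems.append("classroom response rate below 95 percent (target)")
    if learner_rate < 0.85:
        problems.append("learner response rate below 85 percent (target)")
    return problems


# Hypothetical figures for illustration only.
print(check_participation(200, 8000, 0.45, 0.90, 0.97, 0.80))
```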

Once the 50 percent minimum participation rate of original school sampling is reached, countries may use substitute schools to increase the response rate. Substitute schools may be used when an original sampled school refuses to participate. Substitute schools are chosen from the two schools neighbouring the sampled school in the frame, and these schools must have similar demographic characteristics to the sampled school.

The IEA applied explicit and implicit stratified random sampling techniques for South Africa, using the province, school type (public and independent) and language of learning and teaching (Afrikaans, English and dual medium) as stratification variables. Classes were then randomly selected within each sampled school.

The sampling strategy changed slightly in 2019, and the language of learning and teaching was not included as a stratification variable. At the second stage of sampling, an intact class is randomly selected in each sampled school.
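The two-stage idea described above (schools within strata, then one intact class per school) can be sketched as follows. This is a simplified illustration and not the IEA's procedure: it uses simple random sampling within explicit strata, whereas operational TIMSS sampling selects schools systematically with probability proportional to size, and implicit stratification is not modelled. All names and the data layout are hypothetical.

```python
import random
from collections import defaultdict


def sample_schools_and_classes(schools, n_per_stratum, seed=0):
    """Select schools within each (province, school_type) stratum, then one class per school.

    schools: list of dicts with keys 'name', 'province', 'school_type', 'classes'.
    Returns a list of (school name, selected class) pairs.
    """
    rng = random.Random(seed)

    # Stage 0: group schools into explicit strata.
    strata = defaultdict(list)
    for school in schools:
        strata[(school["province"], school["school_type"])].append(school)

    selected = []
    for key in sorted(strata):
        members = strata[key]
        # Stage 1: random selection of schools within the stratum.
        chosen = rng.sample(members, min(n_per_stratum, len(members)))
        for school in chosen:
            # Stage 2: one intact class is randomly selected in each sampled school.
            selected.append((school["name"], rng.choice(school["classes"])))
    return selected


# Hypothetical sampling frame: 2 provinces x 2 school types x 3 schools.
frame = [
    {"name": f"{p}-{t}-{i}", "province": p, "school_type": t, "classes": ["9A", "9B"]}
    for p in ("Gauteng", "Western Cape")
    for t in ("public", "independent")
    for i in range(3)
]
print(sample_schools_and_classes(frame, n_per_stratum=2))
```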

The number of countries that participate varies in each round of TIMSS. Follow this link for a list of all participating countries in each round.

| Assessment year | Grade tested | Number of participating schools | Number of participating learners |
| --- | --- | --- | --- |
| 1995 | Grade 8 | 114 | 4 491 |
| 1999 | Grade 8 | 194 | 8 147 |
| 2003 | Grade 8 | 225 | 8 952 |
| 2003 | Grade 9 | 238 | 4 261 |
| 2011 | Grade 9 | 285 | 10 085 |
| 2015 | Grade 5 | 297 | 11 903 |
| 2015 | Grade 9 | 292 | 12 514 |
| 2019 | Grade 5 | 297 | 11 903 |
| 2019 | Grade 9 | 520 | 20 829 |

TIMSS was first administered in 1995 and operates on a four-year cycle. South Africa did not participate in TIMSS 2007, as we wanted to allow time for the many educational interventions being implemented to improve mathematics and science to take effect before testing again. Countries in the Northern Hemisphere conduct the assessment between April and June of the assessment year, while countries in the Southern Hemisphere conduct the assessment in October and November of the assessment year. In both hemispheres the assessment is conducted near the end of the school year.

TIMSS estimates that within a four-year testing cycle a country could improve by up to 40 points (i.e. one grade level).

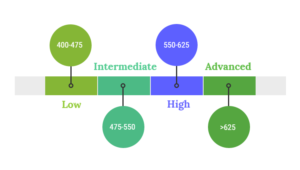

The TIMSS achievement results are summarized using item response theory (IRT) scaling and reported on achievement scales ranging from 0 to 1,000. TIMSS mathematics and science achievement items are designed to measure learner knowledge and proficiency, which can vary widely from one learner to another. To give countries more meaningful descriptions of what learners know, TIMSS has created a set of four international benchmarks: Low (400 score points), Intermediate (475), High (550) and Advanced (625). With each successive assessment, TIMSS works with the Science and Mathematics Item Review Committee (SMIRC) to conduct a scale anchoring analysis that describes learner competencies at the benchmarks.
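To make the benchmark cut points concrete, the short sketch below maps a scale score to the highest international benchmark it reaches. The function name and the label "Below Low" for scores under 400 are assumptions made for this illustration.

```python
from bisect import bisect_right

# Cut points and labels taken from the benchmark description above.
BENCHMARKS = [(400, "Low"), (475, "Intermediate"), (550, "High"), (625, "Advanced")]


def benchmark_reached(score):
    """Return the highest international benchmark reached by a scale score."""
    cut_points = [cut for cut, _ in BENCHMARKS]
    # Number of cut points that are less than or equal to the score.
    reached = bisect_right(cut_points, score)
    if reached == 0:
        return "Below Low"  # assumed label for scores under 400
    return BENCHMARKS[reached - 1][1]


for example_score in (380, 400, 520, 640):
    print(example_score, benchmark_reached(example_score))
```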

TIMSS scale scores range from 0 to 1 000 (although learner performance typically ranges between 300 and 700). The TIMSS achievement scales were established in TIMSS 1995 based on the achievement distribution across all participating countries, treating each country equally. The scale centerpoint of 500 was set to correspond to the mean of the overall achievement distribution, and 100 points on the scale was set to correspond to the standard deviation. Achievement data from subsequent TIMSS assessment cycles were linked to these scales so that increases or decreases in average achievement may be monitored across assessments.
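In simplified form, setting the centerpoint and spread in this way amounts to a linear transformation of the underlying IRT proficiency estimate. The notation below is an assumption made for illustration ($\theta$ for a proficiency estimate, $\mu_{1995}$ and $\sigma_{1995}$ for the mean and standard deviation of the 1995 distribution); the operational procedure involves more steps than shown here.

$$
S = 500 + 100 \cdot \frac{\theta - \mu_{1995}}{\sigma_{1995}}
$$

Under this sketch, a learner whose proficiency is one standard deviation below the 1995 mean would have a scale score of $500 - 100 = 400$.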

Trend studies gather data that allow change over time to be examined using different samples of the same population at different points in time. TIMSS includes a set of trend items that are repeated from one cycle to the next, which allows for linking between assessment cycles. The results from the previous cycle (for example 2015) are then placed on the same scale as the results from the current cycle (2019).

| Subject | Grade | Content domains |
| --- | --- | --- |
| Mathematics | Grade 4 | 1. Numbers; 2. Geometric shapes and measures; 3. Data display |
| Mathematics | Grade 8 | 1. Numbers; 2. Algebra; 3. Geometry; 4. Data and chance |
| Mathematics | Grade 12 | 1. Algebra; 2. Calculus; 3. Geometry |
| Science | Grade 4 | 1. Life science; 2. Physical science; 3. Earth science |
| Science | Grade 8 | 1. Biology; 2. Chemistry; 3. Physics; 4. Earth science |
| Science | Grade 12 | 1. Mechanics and thermodynamics; 2. Electricity and magnetism; 3. Wave phenomena and atomic/nuclear physics |

Every statistical value based on a sample has some uncertainty. The confidence interval is one way of conveying our uncertainty about a parameter, such as a mean. It indicates how far an estimate is likely to be from the true parameter value by reporting a range of numbers in which we expect the true parameter to lie. The interval is centered at the estimated value, and its half-width (the “margin of error”) is an appropriate multiple of the standard error.
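As a minimal sketch of this calculation, the snippet below builds an interval from an estimate and its standard error. The multiplier of 1.96 (for an approximate 95 percent interval under a normal approximation) and the example numbers are assumptions for illustration, not TIMSS results.

```python
def confidence_interval(estimate, standard_error, z=1.96):
    """Return (lower, upper) bounds: the estimate plus or minus z standard errors."""
    margin = z * standard_error  # the margin of error
    return estimate - margin, estimate + margin


# Hypothetical mean score of 500 with a standard error of 5 points.
lower, upper = confidence_interval(500.0, 5.0)
print(round(lower, 1), round(upper, 1))
```

A smaller standard error gives a narrower interval, which is why estimates based on larger or more efficient samples are reported with tighter ranges.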

The standard error is a statistical measure of how accurately a sample represents a population. It is the standard deviation of the sampling distribution of a statistic. A sample mean will generally deviate from the actual mean of the population, and the standard error indicates the typical size of this deviation.

The standard error of the mean (SEM) measures how far the sample mean (average) of the data is likely to be from the true population mean. For any sample of more than one observation, the SEM is smaller than the standard deviation (SD).
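For a simple random sample of $n$ observations, the textbook relationship between the two quantities is:

$$
\mathrm{SEM} = \frac{\mathrm{SD}}{\sqrt{n}}
$$

For example, with $\mathrm{SD} = 100$ and $n = 400$, the SEM would be $100 / 20 = 5$. This formula assumes simple random sampling and is shown only to illustrate the relationship. TIMSS uses a stratified, multi-stage sample, so its standard errors are computed with methods that take the sample design into account.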

An estimate is a value (achievement score or percentage of learners) that is inferred for the whole Grade 9 learner population based on the data collected from a sample of Grade 9 learners. Estimation is a process that systematically adjusts the sample data to determine an estimated value for the population.

According to the South African Schools Act of 1996, the state is required to fund all public schools, and the National Norms and Standards for School Funding (NNSSF) policy provides a quintile ranking mechanism to address equity in schools. Quintile 1 is the group of schools in each province catering for the poorest 20% of learners. Quintile 2 schools cater for the next poorest 20% of learners, and so on. Quintile 5 schools are those that cater for the least poor 20% of learners. Poor schools ranked Quintile 1, 2 and 3 are declared no-fee schools and are allocated a higher state subsidy than the more affluent schools ranked Quintile 4 and 5.

The standard deviation (SD) measures the amount of variability, or dispersion, from the individual data values to the mean.

The standard deviation provides information about the spread of the distribution, that is, how close the individual data values are to the mean value. A low standard deviation means that most of the numbers are very close to the average. A high standard deviation means that the numbers are spread out.
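The difference between a low and a high standard deviation can be seen in a small example. The two sets of scores below are invented for illustration; both have the same mean but very different spreads.

```python
from statistics import mean, stdev

# Two hypothetical sets of scores with the same mean of 50.
clustered = [48, 50, 52]
spread_out = [20, 50, 80]

for label, scores in (("clustered", clustered), ("spread out", spread_out)):
    # stdev() is the sample standard deviation (divides by n - 1).
    print(label, "mean:", mean(scores), "SD:", stdev(scores))
```

The first set has a standard deviation of 2 and the second a standard deviation of 30, even though the averages are identical.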

The Trends in International Mathematics and Science Study (TIMSS) is an international assessment of the mathematics and science knowledge of fourth grade and eighth grade learners around the world.

For TIMSS 2019, results are scheduled to be released at the end of 2020. The next cycle of TIMSS is scheduled to be administered in 2023, with results to be expected at the end of the following year.

Follow this link to access the TIMSS SA website where you will find national reports, highlights documents, policy briefs and other TIMSS related publications.

TIMSS assesses the achievement of learners at three stages of their education. At the primary school level, TIMSS assesses learners in either the 4th, 5th or 6th grade. At the lower secondary school level, learners in 8th or 9th grade are assessed. From 1995 to 2011, South Africa participated only at the lower secondary level. In 2015, South Africa participated at the primary school level for the first time, in TIMSS Numeracy. For TIMSS 2019, Grade 5 learners completed both the mathematics and science assessments.

The TIMSS national study was funded by the Department of Basic Education in South Africa. In 2019, the two provincial studies for Gauteng and the Western Cape were funded by the Gauteng Department of Education, and the Western Cape Department of Education, respectively.

TIMSS was developed by the International Association for the Evaluation of Educational Achievement (IEA) to allow participating nations to compare learners’ educational achievement across borders. The IEA is an independent international cooperative of national research institutions and government agencies, with nearly 70 member countries worldwide.

The IEA contracts with the TIMSS & PIRLS International Study Center at Boston College to lead the design and implementation of TIMSS. The TIMSS & PIRLS International Study Center works with country representatives, called National Research Coordinators, to design and implement TIMSS, assure quality control and international comparability, and report results.

In South Africa, the Human Sciences Research Council (HSRC) hosts the office of the National Research Coordinator, and the organisation is responsible for administering TIMSS.

TIMSS is designed to align broadly with mathematics and science curricula in the participating countries. The results, therefore, suggest the degree to which learners have learned mathematics and science concepts and skills likely to have been taught in school.

In 1995 and 1999, the survey targeted learners in Grade 8, the first year of secondary school. The 2002 study included both Grade 8 and Grade 9 learners in its sample, while the 2011 and 2015 studies were undertaken at the Grade 9 level. The testing grade was changed from Grade 8 to Grade 9 because of South Africa’s overall low performance in the previous rounds of the study. Testing Grade 9 learners was judged to provide a better match between the content knowledge presented to learners in TIMSS and the curriculum coverage in South Africa.

Participating in TIMSS provides important information for policy makers about the state of mathematics and science education, and what is required for improving the quality of education. The assessment results therefore provide the data necessary for monitoring and attempting to improve the health of a country’s basic education system.

TIMSS is a widely recognised international testing programme aimed largely at assessing whether countries are making educational progress over time. TIMSS therefore allows for trend analysis of the results which gives countries an insight into whether or not mathematics and science education is improving.

The effective educational development of young people requires the building blocks of critical thinking and reasoning. Learner performance in maths and science therefore provides important indicators of the health and effectiveness of a country’s education system.

The random selection process used by the IEA ensures that schools with varying demographics and locations are chosen so that the South African sample is representative of the overall South African school population. This is important to ensure that a country’s sample accurately reflects its schools.